Making Ona reliable and resilient

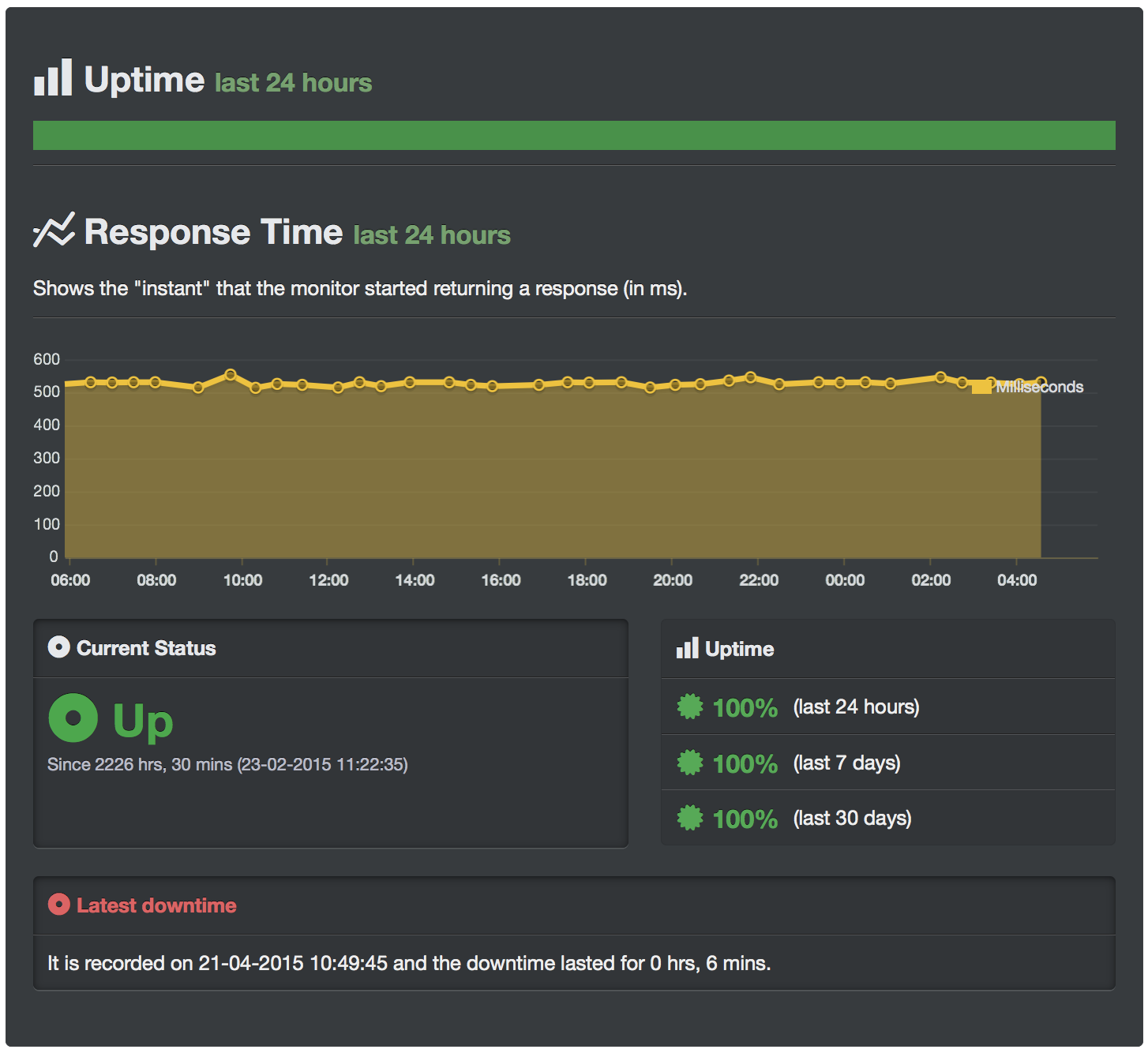

Last year, we experienced a few short periods of downtime. The changes we made in response brought our API uptime to 99.8% in 2015. However, the remaining 0.2% bothered our engineering team, so after additional work, we recently hit a rewarding milestone: 30+ days of 100% uptime on our API and front-end site!
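To put that 0.2% in perspective, here is a quick back-of-the-envelope calculation. The function name is ours, for illustration only, and the figure assumes a 365-day year.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours, ignoring leap years


def downtime_hours(uptime_percent: float, period_hours: float = HOURS_PER_YEAR) -> float:
    """Return the hours of downtime implied by an uptime percentage."""
    return (100.0 - uptime_percent) / 100.0 * period_hours


# 99.8% uptime over a full year leaves roughly 17.5 hours of downtime.
print(round(downtime_hours(99.8), 2))  # 17.52
```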

The first big change we made was transitioning database hosting to RDS, Amazon’s Relational Database Service. RDS allows us to isolate our database servers from our application servers, and to scale the performance and capacity of the database independently of the application servers.

The second big improvement was adding redundancy to our application servers. We now use Elastic Load Balancing to share requests among multiple highly available application servers.

This gives us a number of advantages:

- Horizontal scaling to accommodate traffic increases (we start new servers as load increases)

- Zero-downtime deployment when releasing new features (we update one server at a time)

- Failover in case an individual server stops responding
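The "one server at a time" idea can be sketched roughly as follows. This is a simplified illustration, not our actual deployment tooling: `Server`, `rolling_deploy`, and the `is_healthy` callback are hypothetical names, and a real deploy would also deregister the server from the load balancer and wait for in-flight requests to drain.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Server:
    name: str
    version: str
    in_rotation: bool = True  # whether the load balancer may send it traffic


def rolling_deploy(
    servers: List[Server],
    new_version: str,
    is_healthy: Callable[[Server], bool],
) -> bool:
    """Update servers one at a time; stop at the first failed health check."""
    for server in servers:
        # Never take a server out if no other server would be left serving.
        others_serving = [s for s in servers if s is not server and s.in_rotation]
        if not others_serving:
            return False

        server.in_rotation = False      # stop sending it traffic
        previous = server.version
        server.version = new_version    # stand-in for the real update step

        if not is_healthy(server):
            server.version = previous   # roll back this server
            server.in_rotation = True
            return False                # remaining servers keep the old version

        server.in_rotation = True       # healthy again: back into rotation
    return True


fleet = [Server("app-1", "v1"), Server("app-2", "v1"), Server("app-3", "v1")]
ok = rolling_deploy(fleet, "v2", is_healthy=lambda s: True)
```

Because at least one other server stays in rotation at every step, users keep getting responses while each machine is updated.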

Load balancing does exactly what you would imagine: it balances the load across a set of servers. Whenever someone makes a request to ona.io, instead of going to one specific server, the request goes to an intermediary: the load balancer. The load balancer then determines which of the servers can respond the fastest, and forwards the request there.
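A minimal sketch of that selection step, under stated assumptions: this is not Elastic Load Balancing's actual routing algorithm, and `Backend` and `pick_backend` are hypothetical names. Here, "can respond the fastest" is approximated by "healthy and handling the fewest requests right now".

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Backend:
    name: str
    healthy: bool = True
    in_flight: int = 0  # requests currently being handled


def pick_backend(backends: List[Backend]) -> Optional[Backend]:
    """Choose the healthy backend with the fewest in-flight requests."""
    candidates = [b for b in backends if b.healthy]
    if not candidates:
        return None  # nothing can serve the request
    # Assumption: the least busy server is the one likely to respond fastest.
    return min(candidates, key=lambda b: b.in_flight)


pool = [
    Backend("app-1", in_flight=3),
    Backend("app-2", in_flight=1),
    Backend("app-3", healthy=False),  # skipped: this is the failover case
]
chosen = pick_backend(pool)
print(chosen.name)  # app-2
```

Skipping unhealthy backends is also what provides failover: a server that stops responding simply stops receiving requests.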

Thanks to the continuing efforts of our engineering and support teams, we now have a faster and more scalable Ona. But we still have a lot to do. Coming up next, we will be adding a status page to keep users informed about current uptime statistics.